Software Transcoding of Surveillance Video with FFmpeg

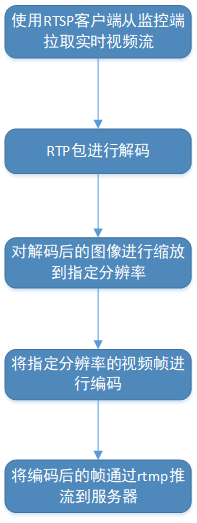

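Live surveillance video usually runs at a bitrate above 5 Mbps. Previewing it in real time on a phone puts heavy strain on bandwidth, so it makes sense to reduce the resolution first and then reduce the bitrate. All of the processing runs on the backend server; the overall workflow is as follows: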

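The Hikvision surveillance camera outputs a resolution of 2560*1440. FFmpeg's APIs handle this workflow well. There are many examples online, but none of them are complete, so a better approach is to start from the examples that ship with the FFmpeg source; for ffmpeg-4.1 they are under \ffmpeg-4.1\doc\examples. Based on those examples I wrapped the decode, scale, and encode steps in one class. The header and the implementation are listed in full below.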
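Before choosing the encoder's output size, it helps to check what a given target width does to the aspect ratio. The following is a minimal sketch, not part of the original class: `VideoSize` and `fitToWidth` are hypothetical names introduced here for illustration. It scales the height by the same factor as the width and rounds both down to even values, since YUV 4:2:0 encoding needs even dimensions.

```cpp
#include <cassert>

// Hypothetical helper type (not from FFmpeg or the original listing).
struct VideoSize {
    int width;
    int height;
};

// Pick an output size that keeps the source aspect ratio for a requested
// width, rounding both dimensions down to even numbers.
VideoSize fitToWidth(int srcWidth, int srcHeight, int targetWidth) {
    assert(srcWidth > 0 && srcHeight > 0 && targetWidth > 1);
    VideoSize out;
    out.width = targetWidth & ~1;  // force an even width
    // Scale the height by the same factor; 64-bit math avoids overflow.
    long long scaled = static_cast<long long>(srcHeight) * out.width / srcWidth;
    out.height = static_cast<int>(scaled) & ~1;  // force an even height
    return out;
}
```

For the 2560*1440 source, `fitToWidth(2560, 1440, 640)` gives 640x360. The listing below hard-codes 640x480, which stretches the 16:9 picture to 4:3, so the values may need adjusting if the proportions matter.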

/*

created:2019/04/02

*/

#ifndef RTP_RESAMPLE_TASK_H__

#define RTP_RESAMPLE_TASK_H__

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

extern "C" {

#include <libavcodec/avcodec.h>

#include <libavutil/avutil.h>

#include <libavutil/opt.h>

#include <libavutil/imgutils.h>

#include <libavutil/base64.h>

#include <libavutil/imgutils.h>

#include <libavutil/parseutils.h>

#include <libswscale/swscale.h>

}

#include <stdio.h>

#include "Task.h"

#include "TimeoutTask.h"

#include "SVector.h"

typedef void(*EncodedCallbackFunc)(void*, char *, int);

typedef struct RtpData

{

char *data;

int length;

}RtpData;

typedef struct DstVideoInfo

{

int width;

int height;

int samplerate;

}DstVideoInfo;

typedef struct SourceVideoInfo{

int payload_type;

char *codec_type;

int freq_hz;//90000

char *sps;

char *pps;

long profile_level_id;

char *media_header;

}SourceVideoInfo;

typedef struct

{

struct AVCodec *codec;// Codec

struct AVCodecContext *context;// Codec Context

AVCodecParserContext *parser;

struct AVFrame *frame;// Frame

AVPacket *pkt;

int frame_count;

int iWidth;

int iHeight;

int comsumedSize;

int skipped_frame;

//for decodec

void* accumulator;

int accumulator_pos;

int accumulator_size;

char *szSourceDecodedSPS;

int sps_len;

char *szSourceDecodedPPS;

int pps_len;

} X264_Decoder_Handler;

typedef struct

{

struct AVCodec *codec;// Codec

struct AVCodecContext *context;// Codec Context

int frame_count;

struct AVFrame *frame;// Frame

AVPacket *pkt;

Bool16 force_idr;

int quality; // [1-31]

int iWidth;

int iHeight;

int comsumedSize;

int got_picture;

char *encodedBuffer;

int encodedBufferSize;

int encodedSize;

char *rawBuffer;

int rawBufferSize;

struct SwsContext *sws_ctx;

char *sps;

int sps_len;

char *pps;

int pps_len;

FILE *fTest;

} X264_Encoder_Handler;

class RTPResampleTask {

public:

// output width, height, sample rate

RTPResampleTask(const DstVideoInfo *dstVideoInfo, const SourceVideoInfo *sourceVideoInfo, EncodedCallbackFunc func, void *funcArgs);

~RTPResampleTask();

int resample(const char *inputBuffer, int inputSize, char **outputBuffer, int *outputSize);

char *getResampleSPS(int *sps_len){

*sps_len = encoderHandler.sps_len;

return encoderHandler.sps;

};

char *getResamplePPS(int *pps_len){

*pps_len = encoderHandler.pps_len;

return encoderHandler.pps;

};

enum{

STATE_INIT = -1,

STATE_RUNNING = 0,

STATE_WAITING = 1,

STATE_START_DECODE = 2,

STATE_DONE = 3

};

private:

int initEncoder();

int initDecoder();

void deInitEncoder();

void deInitDecoder();

int decodePacket(const char *inputBuffer, int inputSize);

int encodePacket();

private:

UInt32 fState;

UInt32 kIdleTime;

EncodedCallbackFunc callback;

SVector<RtpData *> reEncodedWaitList;

SourceVideoInfo sourceVideoInfo;

DstVideoInfo dstVideoInfo;

X264_Decoder_Handler decoderHandler;

X264_Encoder_Handler encoderHandler;

void *funcArgs;

};

#endif//RTP_RESAMPLE_TASK_H__
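The `sps` and `pps` fields of `SourceVideoInfo` in the header above hold the source stream's parameter sets, which the implementation that follows decodes from Base64 and hands to the decoder as extradata. As a rough illustration of that byte layout, here is a small sketch that is independent of FFmpeg. `buildAnnexBExtradata` is a hypothetical helper, and using a 4-byte start code before both units is an assumption of this sketch (a 3-byte start code is also valid Annex B).

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical helper: concatenate raw SPS and PPS NAL units into one
// Annex B byte string, each prefixed with the start code 00 00 00 01.
std::vector<uint8_t> buildAnnexBExtradata(const std::vector<uint8_t>& sps,
                                          const std::vector<uint8_t>& pps) {
    assert(!sps.empty() && !pps.empty());
    static const uint8_t kStartCode[4] = {0x00, 0x00, 0x00, 0x01};
    std::vector<uint8_t> out;
    out.reserve(2 * sizeof(kStartCode) + sps.size() + pps.size());
    out.insert(out.end(), kStartCode, kStartCode + sizeof(kStartCode));
    out.insert(out.end(), sps.begin(), sps.end());
    out.insert(out.end(), kStartCode, kStartCode + sizeof(kStartCode));
    out.insert(out.end(), pps.begin(), pps.end());
    return out;
}
```

When such a buffer is copied into `AVCodecContext::extradata`, FFmpeg additionally expects `AV_INPUT_BUFFER_PADDING_SIZE` zeroed bytes after the data, and `extradata_size` must count every start-code byte.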

/*

created:2019/04/02

*/

#include "RTPResampleTask.h"

#define INBUF_SIZE 4096

#define H264_RTP_PAYLOAD_SIZE 1320

RTPResampleTask::RTPResampleTask(const DstVideoInfo *dstVideoInfo, const SourceVideoInfo *sourceVideoInfo, EncodedCallbackFunc func , void *funcArgs){

uint8_t szDecodedSPS[128] = { 0 };

this->decoderHandler.sps_len = av_base64_decode((uint8_t*)szDecodedSPS, (const char *)sourceVideoInfo->sps, sizeof(szDecodedSPS));//nSPSLen=24

this->decoderHandler.szSourceDecodedSPS = (char *)malloc(this->decoderHandler.sps_len);

if (this->decoderHandler.szSourceDecodedSPS != NULL){

memcpy(this->decoderHandler.szSourceDecodedSPS, szDecodedSPS, this->decoderHandler.sps_len);

}

uint8_t szDecodedPPS[128] = { 0 };

this->decoderHandler.pps_len = av_base64_decode(( uint8_t*)szDecodedPPS, (const char *)sourceVideoInfo->pps, sizeof(szDecodedPPS));//nPPSLen=4

this->decoderHandler.szSourceDecodedPPS = (char *)malloc(this->decoderHandler.pps_len);

if (this->decoderHandler.szSourceDecodedPPS != NULL){

memcpy(this->decoderHandler.szSourceDecodedPPS, szDecodedPPS, this->decoderHandler.pps_len);

}

this->funcArgs = funcArgs;

// Store the callback as well; otherwise the member stays uninitialized.
this->callback = func;

kIdleTime = 100;

fState = STATE_INIT;

initEncoder();

initDecoder();

}

RTPResampleTask::~RTPResampleTask(){

deInitEncoder();

deInitDecoder();

}

int RTPResampleTask::initEncoder(){

AVCodecID codec_id = AV_CODEC_ID_H264;

if (codec_id == AV_CODEC_ID_H264){

}

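// Clear every pointer up front so deInitEncoder() is safe after an early return.
encoderHandler.rawBuffer = NULL;
encoderHandler.rawBufferSize = 0;
encoderHandler.encodedBuffer = NULL;
encoderHandler.encodedBufferSize = 0;
encoderHandler.encodedSize = 0;
encoderHandler.sws_ctx = NULL;
encoderHandler.context = NULL;
encoderHandler.frame = NULL;
encoderHandler.fTest = NULL;
encoderHandler.sps = NULL;
encoderHandler.sps_len = 0;
encoderHandler.pps = NULL;
encoderHandler.pps_len = 0;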

encoderHandler.pkt = av_packet_alloc();

if (!encoderHandler.pkt) {

printf("encoderHandler.pkt == NULL");

return -1;

}

encoderHandler.codec = avcodec_find_encoder(codec_id);

if(encoderHandler.codec == NULL ) {

printf("encoderHandler.codec == NULL");

return -1;

}

// Allocate the AVCodecContext; it holds all of the encoder's parameters and state.

encoderHandler.context = avcodec_alloc_context3(encoderHandler.codec);

if(encoderHandler.context == NULL ) {

printf("encoderHandler.context == NULL");

return -1;

}

// Set the AVCodecContext encoding parameters

struct AVCodecContext *c = encoderHandler.context;

avcodec_get_context_defaults3(c, encoderHandler.codec);

c->codec_id = codec_id;

c->bit_rate = 500000;

c->time_base.den = 25; // denominator

c->time_base.num = 1; // numerator

/* resolution must be a multiple of two */

c->width = 640;

c->height = 480;

/* frames per second */

// C99 compound literals are not standard C++; use brace initialization instead.
c->time_base = AVRational{1, 25};

c->framerate = AVRational{25, 1};

c->gop_size = 1;//

c->max_b_frames = 0;

//c->rtp_payload_size = H264_RTP_PAYLOAD_SIZE;

c->pix_fmt = AV_PIX_FMT_YUV420P;

c->codec_type = AVMEDIA_TYPE_VIDEO;

//c->flags|= CODEC_FLAG_GLOBAL_HEADER;

// AVOptions (private options of the x264 encoder).
// Only one preset takes effect; the earlier "slow" setting was immediately overridden.
av_opt_set(c->priv_data, "preset", "ultrafast", 0);

av_opt_set(c->priv_data, "tune","stillimage,fastdecode,zerolatency",0);

av_opt_set(c->priv_data, "x264opts","crf=26:vbv-maxrate=728:vbv-bufsize=364:keyint=25",0);

encoderHandler.frame = av_frame_alloc();

if(encoderHandler.frame == NULL ) {

printf("encoderHandler.frame == NULL");

return -1;

}

encoderHandler.frame->format = c->pix_fmt;

encoderHandler.frame->width = c->width;

encoderHandler.frame->height = c->height;

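// Allocate the pixel buffer for the scaled frame at the target width and height.
// av_image_alloc() replaces the deprecated avpicture_alloc().
if (av_image_alloc(encoderHandler.frame->data, encoderHandler.frame->linesize, encoderHandler.frame->width, encoderHandler.frame->height, AV_PIX_FMT_YUV420P, 32) < 0) {
printf("av_image_alloc failed");
return -1;
}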

/* open it */

int ret = avcodec_open2(encoderHandler.context, encoderHandler.codec , NULL);

if (ret < 0) {

fprintf(stderr, "Could not open codec: %d\n", ret);

return -1;

}

encoderHandler.fTest = fopen("/tmp/test_out.h264", "wb");

if (!encoderHandler.fTest) {

fprintf(stderr, "Could not open /tmp/test_out.h264 \n");

return -1;

}

encoderHandler.sps = NULL;

encoderHandler.sps_len = 0;

encoderHandler.pps = NULL;

encoderHandler.pps_len = 0;

return 0;

}

void RTPResampleTask::deInitEncoder(){

if(encoderHandler.context){

avcodec_free_context(&encoderHandler.context);

encoderHandler.context = NULL;

}

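if(encoderHandler.frame){
// The pixel buffer is not reference counted, so release it before the frame itself.
av_freep(&encoderHandler.frame->data[0]);
av_frame_free(&encoderHandler.frame);
encoderHandler.frame = NULL;
}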

if (encoderHandler.pkt){

av_packet_free(&encoderHandler.pkt);

encoderHandler.pkt = NULL;

}

if (encoderHandler.sws_ctx){

sws_freeContext(encoderHandler.sws_ctx);

encoderHandler.sws_ctx = NULL;

}

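if (encoderHandler.fTest){
fclose(encoderHandler.fTest);
encoderHandler.fTest = NULL;
}
// The accumulated output buffer was never released in the original code.
if (encoderHandler.encodedBuffer != NULL){
free(encoderHandler.encodedBuffer);
encoderHandler.encodedBuffer = NULL;
}
if (encoderHandler.sps != NULL){
free(encoderHandler.sps);
encoderHandler.sps = NULL;
}
if (encoderHandler.pps != NULL){
free(encoderHandler.pps);
encoderHandler.pps = NULL;
}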

}

int RTPResampleTask::initDecoder(){

AVCodecID codec_id = AV_CODEC_ID_H264;

if (codec_id == AV_CODEC_ID_H264){

}

decoderHandler.pkt = av_packet_alloc();

if (!decoderHandler.pkt) {

printf("decoderHandler.pkt == NULL");

return -1;

}

decoderHandler.codec = avcodec_find_decoder(codec_id);

if(decoderHandler.codec == NULL )

{

printf("decoderHandler.codec == NULL");

return -1;

}

decoderHandler.parser = av_parser_init(decoderHandler.codec->id);

if (!decoderHandler.parser) {

printf("decoderHandler.parser == NULL");

return -1;

}

// Allocate the AVCodecContext; it holds all of the decoder's parameters and state.

decoderHandler.context = avcodec_alloc_context3(decoderHandler.codec);

if (!decoderHandler.context) {

printf("decoderHandler.context == NULL");

return -1;

}

// Build Annex B extradata: 00 00 00 01 + SPS + 00 00 01 + PPS.
if (decoderHandler.sps_len <= 0 || decoderHandler.pps_len <= 0 || !decoderHandler.szSourceDecodedSPS || !decoderHandler.szSourceDecodedPPS) {
printf("invalid source SPS/PPS");
return -1;
}
const uint8_t spsHeader[4] = {0x00, 0x00, 0x00, 0x01};
const uint8_t ppsHeader[3] = {0x00, 0x00, 0x01};
// The two start codes take 7 bytes in total; counting only 6 truncated the last PPS byte.
int sps_pps_len = (int)sizeof(spsHeader) + decoderHandler.sps_len + (int)sizeof(ppsHeader) + decoderHandler.pps_len;
// Write straight into the padded extradata buffer; the temporary copy was leaked before.
uint8_t *extradata = (uint8_t *)av_mallocz(sps_pps_len + AV_INPUT_BUFFER_PADDING_SIZE);
if (!extradata) {
printf("extradata allocation failed");
return -1;
}
memcpy(extradata, spsHeader, sizeof(spsHeader));
memcpy(extradata + sizeof(spsHeader), decoderHandler.szSourceDecodedSPS, decoderHandler.sps_len);
memcpy(extradata + sizeof(spsHeader) + decoderHandler.sps_len, ppsHeader, sizeof(ppsHeader));
memcpy(extradata + sizeof(spsHeader) + decoderHandler.sps_len + sizeof(ppsHeader), decoderHandler.szSourceDecodedPPS, decoderHandler.pps_len);
decoderHandler.context->extradata = extradata;
decoderHandler.context->extradata_size = sps_pps_len;

decoderHandler.context->time_base.num = 1;

decoderHandler.context->time_base.den = 25;

if (avcodec_open2(decoderHandler.context, decoderHandler.codec, NULL) < 0) {

printf("avcodec_open2 return failed");

return -1;

}

decoderHandler.frame = av_frame_alloc();

if (!decoderHandler.frame) {

printf("decoderHandler.frame is null.");

return -1;

}

printf("initDecoder, width:%d, height:%d", decoderHandler.context->width, decoderHandler.context->height);

return 0;

}

void RTPResampleTask::deInitDecoder(){

if(decoderHandler.frame){

av_frame_free(&decoderHandler.frame);

decoderHandler.frame = NULL;

}

if(decoderHandler.parser){

av_parser_close(decoderHandler.parser);

decoderHandler.parser = NULL;

}

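if (decoderHandler.pkt){
av_packet_free(&decoderHandler.pkt);
}
// avcodec_free_context() already frees context->extradata, so freeing it here
// as well would be a double free (and dereferenced a possibly NULL context).
if(decoderHandler.context){
avcodec_free_context(&decoderHandler.context);
decoderHandler.context = NULL;
}
// Release the decoded source SPS/PPS copies made in the constructor.
free(decoderHandler.szSourceDecodedSPS);
decoderHandler.szSourceDecodedSPS = NULL;
free(decoderHandler.szSourceDecodedPPS);
decoderHandler.szSourceDecodedPPS = NULL;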

}

int RTPResampleTask::resample(const char *inputBuffer, int inputSize, char **outputBuffer, int *outputSize){

*outputBuffer = NULL;

*outputSize = 0;

int ret = decodePacket((const char *)inputBuffer, inputSize);

//printf("video resample decodePacket ret:%d outputSize:%d\n", ret, encoderHandler.rawBufferSize);

if (ret == 0){

char *encodeOutputBuffer = NULL;

int encodeOutputSize = 0;

int src_linesize[4], dst_linesize[4];

//scale

if (encoderHandler.sws_ctx == NULL){

encoderHandler.sws_ctx = sws_getContext(decoderHandler.context->width, decoderHandler.context->height, decoderHandler.context->pix_fmt,

encoderHandler.context->width, encoderHandler.context->height, AV_PIX_FMT_YUV420P,

SWS_BILINEAR, NULL, NULL, NULL);

}

sws_scale(encoderHandler.sws_ctx, decoderHandler.frame->data,

decoderHandler.frame->linesize, 0, decoderHandler.context->height, encoderHandler.frame->data, encoderHandler.frame->linesize);

ret = encodePacket();

if (ret != 0){

printf("video resample ret:%d err\n", ret);

return -1;

}

fwrite(encoderHandler.encodedBuffer, 1, encoderHandler.encodedSize, encoderHandler.fTest);

*outputBuffer = encoderHandler.encodedBuffer;

*outputSize = encoderHandler.encodedSize;

int typeIndex = 3;//default 00 00 01

if (encoderHandler.encodedBuffer[3] == 0x01){

typeIndex = 4;

}

if (encoderHandler.encodedBuffer[typeIndex] == 0x67 && encoderHandler.sps == NULL){

printf("video resample has sps:%d \n", encoderHandler.encodedSize);

encoderHandler.sps_len = 0;

int i = 0;

unsigned short find_sps_flag = 0;

while(i++ < encoderHandler.encodedSize && typeIndex + 3 < encoderHandler.encodedSize){ // stay inside the buffer

if ((encoderHandler.encodedBuffer[typeIndex] == 0x00 &&

encoderHandler.encodedBuffer[typeIndex + 1] == 0x00 &&

encoderHandler.encodedBuffer[typeIndex + 2] == 0x01 &&

encoderHandler.encodedBuffer[typeIndex + 3] == 0x68)

){

find_sps_flag = 1;

break;

}

typeIndex++;

}

if (!find_sps_flag){

return 0;

}

find_sps_flag = 0;

encoderHandler.sps_len = typeIndex;

printf("video resample encoderHandler.sps_len:%d \n", encoderHandler.sps_len);

encoderHandler.sps = (char *)malloc(encoderHandler.sps_len);

if (encoderHandler.sps != NULL){

memcpy((void *)encoderHandler.sps, (void *)encoderHandler.encodedBuffer, encoderHandler.sps_len);

}

encoderHandler.pps_len = 0;

typeIndex = encoderHandler.sps_len + 4;

while(i++ < encoderHandler.encodedSize && typeIndex + 2 < encoderHandler.encodedSize){ // stay inside the buffer

if (

(encoderHandler.encodedBuffer[typeIndex] == 0x00 &&

encoderHandler.encodedBuffer[typeIndex +1] == 0x00 &&

encoderHandler.encodedBuffer[typeIndex +2] == 0x01)

){

find_sps_flag = 1;

break;

}

typeIndex++;

encoderHandler.pps_len++;

}

if (!find_sps_flag){

return 0;

}

encoderHandler.pps_len += 4;

printf("video resample encoderHandler.pps_len:%d \n", encoderHandler.pps_len);

encoderHandler.pps = (char *)malloc(encoderHandler.pps_len);

if (encoderHandler.pps != NULL){

memcpy((void *)encoderHandler.pps, &encoderHandler.encodedBuffer[encoderHandler.sps_len], encoderHandler.pps_len);

}

}

return 0;

}

return -1;

}

int RTPResampleTask::decodePacket(const char *inputBuffer, int inputSize){

int ret;

uint8_t *data = ( uint8_t *)inputBuffer;

int data_size = inputSize;

//while (data_size > 0)

{

encoderHandler.rawBufferSize = 0;

av_init_packet(decoderHandler.pkt);

decoderHandler.pkt->dts = AV_NOPTS_VALUE;

decoderHandler.pkt->size = (int)inputSize;

decoderHandler.pkt->data = (uint8_t *)inputBuffer;

if (decoderHandler.pkt->size){

ret = avcodec_send_packet(decoderHandler.context, decoderHandler.pkt);

if (ret < 0) {

fprintf(stderr, "decodePacket Error sending a packet for decoding\n");

return -1;

}

ret = avcodec_receive_frame(decoderHandler.context, decoderHandler.frame);

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF){

return -1;

}

else if (ret < 0) {

fprintf(stderr, "decodePacket Error during decoding :%d\n", ret);

return -1;

}

// av_image_get_buffer_size() replaces the deprecated avpicture_get_size().
int xsize = av_image_get_buffer_size(decoderHandler.context->pix_fmt, decoderHandler.context->width, decoderHandler.context->height, 1);

//fprintf(stderr, "decodePacket end decoding :%d, width:%d, height:%d\n", xsize, decoderHandler.context->width, decoderHandler.context->height);

encoderHandler.rawBufferSize = xsize;

//

}else{

return -1;

}

}

return 0;

}

int RTPResampleTask::encodePacket(){

#if 0

int size = avpicture_fill((AVPicture*)encoderHandler.frame, (uint8_t*)inputBuffer, AV_PIX_FMT_YUV420P, encoderHandler.context->width, encoderHandler.context->height);

if (size != inputSize){

/* guard */

printf("RTPResampleTask::encodePacket invalid size: %u<>%u", size, inputSize);

return 0;

}

#endif//encoderHandler.frame

int ret = avcodec_send_frame(encoderHandler.context, encoderHandler.frame);

if (ret < 0) {

printf("RTPResampleTask::encodePacket error sending a frame for encoding, ret:%d\n", ret);

return ret;

}

encoderHandler.encodedSize = 0;

while (ret >= 0)

{

ret = avcodec_receive_packet(encoderHandler.context, encoderHandler.pkt);

if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF){

if (encoderHandler.encodedSize > 0){

return 0;

}

return -1;

} else if (ret < 0) {

printf("RTPResampleTask::encodePacket error during encoding\n");

return ret;

}

if (encoderHandler.encodedBuffer == NULL){

encoderHandler.encodedBuffer = (char *)malloc((encoderHandler.context->width*encoderHandler.context->height * 3)/2);

encoderHandler.encodedBufferSize = (encoderHandler.context->width*encoderHandler.context->height * 3)/2;

}

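if (encoderHandler.encodedBufferSize < (encoderHandler.pkt->size + encoderHandler.encodedSize)){
int newSize = encoderHandler.pkt->size + encoderHandler.encodedSize;
// Keep the old pointer until realloc succeeds so it is not lost on failure.
char *grown = (char *)realloc(encoderHandler.encodedBuffer, newSize);
if (grown == NULL){
av_packet_unref(encoderHandler.pkt);
return -1;
}
encoderHandler.encodedBuffer = grown;
// The capacity is the new size; adding it to the old value overstated the
// capacity and could let a later memcpy overflow the buffer.
encoderHandler.encodedBufferSize = newSize;
}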

if (encoderHandler.encodedBuffer){

memcpy((void *)(encoderHandler.encodedBuffer + encoderHandler.encodedSize), encoderHandler.pkt->data, encoderHandler.pkt->size);

}

encoderHandler.encodedSize += encoderHandler.pkt->size;

//printf("RTPResampleTask::encodePacket success ret:%d\n", encoderHandler.pkt->size);

//if (callback != NULL){

//callback(funcArgs, (char*)encoderHandler.pkt->data, encoderHandler.pkt->size);

//}

av_packet_unref(encoderHandler.pkt);

}

//printf("RTPResampleTask::encodePacket success ret:%d\n", encoderHandler.encodedBufferSize);

return 0;

}
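The SPS/PPS extraction in `resample()` above matches the literal header bytes 0x67 and 0x68 and assumes a fixed NAL order. A more general approach is to split the encoder output on Annex B start codes and read `nal_unit_type` from the low five bits of each NAL header. The sketch below shows that idea using only the standard library. `NalUnit` and `splitAnnexB` are hypothetical names, and dropping zero bytes in front of a start code assumes they belong to a 4-byte start code or to padding.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical description of one NAL unit inside an Annex B buffer.
struct NalUnit {
    size_t offset;  // index of the NAL header byte, just past the start code
    size_t size;    // length in bytes, excluding the start code
    int type;       // nal_unit_type: 7 = SPS, 8 = PPS, 5 = IDR slice
};

std::vector<NalUnit> splitAnnexB(const uint8_t* buf, size_t len) {
    // First pass: record the position right after every 00 00 01 start code.
    std::vector<size_t> starts;
    size_t i = 0;
    while (i + 3 < len) {  // a header byte must follow the start code
        if (buf[i] == 0 && buf[i + 1] == 0 && buf[i + 2] == 1) {
            starts.push_back(i + 3);
            i += 3;
        } else {
            ++i;
        }
    }
    // Second pass: each unit runs up to the next start code or the buffer end.
    std::vector<NalUnit> units;
    for (size_t k = 0; k < starts.size(); ++k) {
        size_t begin = starts[k];
        size_t end = (k + 1 < starts.size()) ? starts[k + 1] - 3 : len;
        // Drop trailing zero bytes (e.g. the leading 00 of a 4-byte start code).
        while (end > begin && buf[end - 1] == 0) {
            --end;
        }
        units.push_back({begin, end - begin, buf[begin] & 0x1F});
    }
    return units;
}
```

With a splitter like this, the SPS and PPS can be copied out by looking for units whose `type` is 7 or 8, whichever start-code length the encoder used and whatever `nal_ref_idc` bits are set in the header byte.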


This is an original article from 呱牛笔记. You may repost it without contacting me, but please credit 呱牛笔记, it3q.com.
